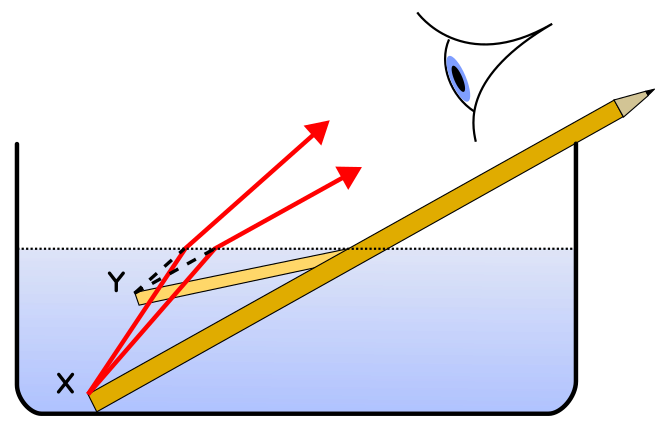

Graphic of Plato's allegory of the cave: the things we see are only poor shadows of the perfect forms. (Credit: Wikimedia Commons)

(Only the study of philosophy enables us to become the toga-clad person near the top.)

Rationalism has problems of its own, different from those of empiricism, especially with the Theory of Evolution. For Plato, the idea of a canine genetic code containing the instructions for making an ideal dog would have sounded appealing. Obviously, he would have said, it must have come from the Good.

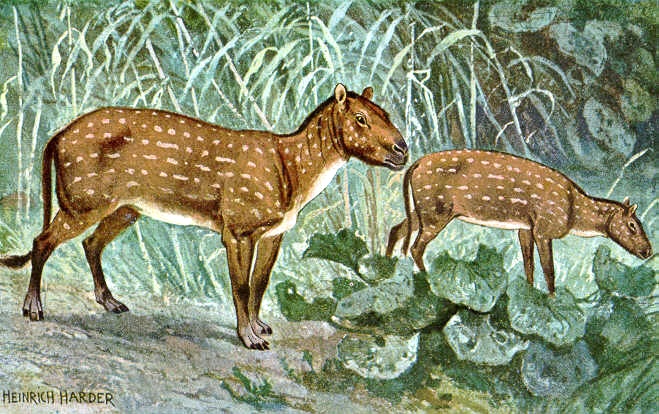

But Plato would have rejected the idea that a few geological ages ago no dogs existed, while other animals did exist that looked like dogs but were not imperfect copies of an ideal dog “form.” We now know these creatures can be more fruitfully thought of as excellent examples of *Canis lupus variabilis*, another species entirely. For Plato, all dogs are poor copies of the ideal dog that exists in the pure dimension of the Good. The fossil records in the rocks don’t so much cast doubt on Plato’s idealism as belie it altogether. Gradual, incremental change in all species? Plato, with his commitment to forms, would have confidently rejected the Theory of Evolution.

Descartes’s version of rationalism, for its part, would have had serious difficulties with the mentally challenged. Do they have minds/souls or not? If they don’t grasp mathematics and geometry, that is, if they don’t know and can’t discuss “clear and distinct” ideas, are they human or are they mere animals? And the abilities of the mentally challenged range from slightly below normal to severely impaired. At what point on this continuum do we cross the threshold between human and animal, or, in other words, between the realm of the soul and that of mere matter? Descartes’s ideas about what properties make a human being human are disturbing. His ideas about how we can treat other creatures are revolting.

To Descartes, animals didn’t have souls; therefore, humans could do whatever they wished to them without violating any of his moral beliefs. In his own scientific work, he dissected live dogs. Their screams weren’t evidence of real pain, he claimed. They had no souls and thus could not feel pain. The noise was like the ringing of an alarm clock: a mechanical sound, nothing more. Generations of scientists after him did the same, practicing vivisection in the name of Science.²

Would Descartes have stuck to his definition of what makes a being morally considerable if he had known then what we know now about the physiology of pain? Would Plato have kept preaching his form of rationalism if he had suddenly been given access to the fossil records we have? These are imponderable questions. It’s hard to imagine that either of them would have been that stubborn. But the point is that they didn’t know then what we know now.

In any case, after considering some likely rationalist responses to the test situations described in this chapter, we can reasonably conclude that rationalism’s way of portraying what human minds do is simply mistaken. That is not how we should picture what thinking is or how it is best done, because the rationalist model doesn’t fit what we actually do.

And now we can simply put aside our regrets about both the rationalists and the empiricists and the inadequacies of their ways of looking at the world. We are ready to get back to Bayesianism.

Notes

1. “Bayes’ Formula,” Cornell University, Department of Mathematics website. Accessed April 6, 2015. http://www.math.cornell.edu/~mec/2008-2009/TianyiZheng/Bayes.html.

2. Richard Dawkins, “Richard Dawkins on Vivisection: ‘But Can They Suffer?’” BoingBoing blog, June 30, 2011. http://boingboing.net/2011/06/30/richard-dawkins-on-v.html.