Chapter 5: The Joys and Woes of Empiricism

John Locke, empiricist philosopher (credit: Wikimedia Commons)

David Hume, empiricist philosopher (credit: Wikimedia Commons)

Empiricism is a way of thinking about

thinking and what we mean when we say we “know” something. It is the logical base

of Science, and it claims to begin only from sense data, i.e., what we touch,

see, hear, taste, and smell.

Empiricism assumes that all we know is

sensory experiences and memories of them. This includes even the concepts that

enable us to sort and save those experiences and memories, plan responses to

events in the world, and then enact the plans. For empiricists, concepts are labels

for bunches of memories that we think look alike. Concepts enable us to sort

through, and respond to, real life events. We keep and use those concepts that

have reliably guided us in the past to less pain and more joy. We drop ones

that have proved useless.

According to Empiricism, our sense organs

are continually feeding bits of information into our minds about the sizes, textures,

colors, shapes, sounds, aromas, and flavors of things we encounter. Even when

we are not consciously paying attention, at other, deeper levels our minds are taking

in these details. “The eye – it cannot choose but see. We cannot bid the ear be

still. Our bodies feel where’er they be, against or with our will.”

(Wordsworth)

For example, when I hear noises outside, I know whether they come from

a car approaching or a dog barking. Even in my sleep, I detect

gravel crunching sounds in the driveway. One spouse awakes to the baby’s

crying; the other dozes on. One wakes when the furnace is not cutting out as it

should; the other sleeps. The ship’s engineer sleeps through steam turbines

roaring and props churning, but she wakes up when one bearing begins to hum a

bit above its normal pitch. She wakes up because she knows something

is wrong. A bearing is running hot. Empiricism is a modern way of understanding

our complex information-processing system – the human body, its brain, and the

mind that brain holds.

In the Empiricist model, the mind notices

how certain patterns of details keep recurring in some situations. When we

notice a pattern of details in encounter after encounter with a familiar

situation or object, we make mental files – for example, for round things, red things,

sweet things, or crisp things. We then save the information about that type of

object in our memories. The next time we encounter an object of that type, we

simply go to our memory files. There, by cross-referencing, we get: “Fruit.

Good to eat.” Empiricists say all general concepts are built up in this way.

Store, review, hypothesize, test, label, repeat.
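The filing and cross-referencing process described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not a claim about how brains actually store concepts: the class name `ConceptMemory`, its methods, and the sample features and labels are all invented here for the example.

```python
from collections import Counter, defaultdict


class ConceptMemory:
    """Toy model of empiricist 'mental files' (hypothetical, for illustration)."""

    def __init__(self):
        # One file per sensed feature; each file counts the labels
        # that past experiences showing that feature were given.
        self.files = defaultdict(Counter)

    def store(self, features, label):
        """Save one labelled experience under every feature it showed."""
        for feature in features:
            self.files[feature][label] += 1

    def recognize(self, features):
        """Cross-reference the files and return the best-supported label."""
        votes = Counter()
        for feature in features:
            if feature in self.files:
                votes.update(self.files[feature])
        if not votes:
            return None  # no stored experience matches any sensed feature
        return votes.most_common(1)[0][0]


memory = ConceptMemory()
memory.store({"round", "red", "sweet", "crisp"}, "fruit, good to eat")
memory.store({"round", "red", "sweet"}, "fruit, good to eat")
memory.store({"round", "grey", "hard"}, "stone")

print(memory.recognize({"round", "red", "crisp"}))  # a familiar pattern
print(memory.recognize({"furry", "growling"}))      # an unfamiliar one
```

In this sketch, a familiar bundle of features retrieves the label that past experience attached to it, while a bundle with no stored match retrieves nothing at all.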

Scientists now believe this Empiricist

model is only part of the full picture. In fact, most of the concepts we use to

recognize and respond to reality are not learned by each of us on our own, but

instead are concepts we were taught as children. Our childhood programming

teaches us how to cognize things. After that, almost always, we don’t cognize

things, only recognize them. (We will explore why our parents and

teachers program us in the ways that they do in upcoming chapters.) Also note

that when we encounter a thing that doesn’t fit any of our familiar concepts,

we grow wary. (“What’s that?! Stay back!”)

But empiricists claim that all human

thinking and knowing happens in this experience-based way. Watch the world.

Notice patterns that repeat. Create labels (concepts) for the patterns that you

keep encountering, especially those that signify hazard or opportunity. Store

them up in memories. Pull the concepts out when they fit, then use them to deal

with life events. Remember what works. Keep trying.

For individuals and nations, according to

the empiricists, that’s how life goes. And the most effective way of life for

us, the way that makes this common-sense process rigorous, and that keeps

getting good results, is Science.

There are arguments against the empiricist

way of thinking about thinking and its model of how human thinking and knowing

work. Empiricism is a way of seeing ourselves and our minds that sounds

logical, but it has its problems.

Child sensing her world (credit: Sheila Brown; Public Domain Pictures)

Since Locke, critics of Empiricism (and

Science) have asked, “When a human sees things in the real world and spots

patterns in the events going on there, what is doing the spotting? The

human mind and the sense data-processing programs it must already contain to be

able to do the tricks empiricists describe obviously came before any sense-data

processing could be done. What is this equipment, and how does it work?”

Philosophers of Science have trouble explaining what this “mind” that does the

“knowing” is.

Consider what Science is aiming to

achieve. What scientists want to discover, come to understand, and then use in

the real world are what are usually called “laws of nature”. Scientists do more

than just observe the events in physical reality. They also strive to

understand how these events come about and then to express what they understand

in general statements about these events, in mathematical formulas, chemical

formulas, rigorously logical sentences in one of the world’s languages, or

some other system that people use for representing their thoughts. (A

computer language might do.) A natural law statement is a claim about how some

part of the world works. A statement of any kind – if it is to be considered

scientific – must be expressed in a way that can be tested in the real,

physical world.

Put another way, if a claim about a newly

discovered real-world truth is going to be worth considering, to be of any

practical use whatever, we must be able to state it in some language that

humans use to communicate ideas to other humans, for example, mathematics or

one of our species’ natural languages: English, Russian, Chinese, etc. A theory

that can be expressed only inside the head of its inventor will die with her or

him.

Consider an example. The following is a

verbal statement of Newton’s law of universal gravitation: “Any two bodies in

the universe attract each other with a force that is directly proportional to

the product of their masses and inversely proportional to the square of the

distance between them.”

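The mathematical formula expressing the same law is:

$$F = G \frac{m_1 m_2}{r^2}$$

Here F is the attractive force, m_1 and m_2 are the two masses, r is the distance between them, and G is the gravitational constant.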
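As a rough numerical illustration of the law just stated, the sketch below computes the attraction between the Earth and the Moon. The function name and the constants are assumptions supplied for this example; the figures are approximate published values, not data from this chapter.

```python
# Approximate values, supplied only for illustration.
G = 6.674e-11                    # gravitational constant, N m^2 / kg^2
EARTH_MASS_KG = 5.972e24
MOON_MASS_KG = 7.348e22
EARTH_MOON_DISTANCE_M = 3.844e8  # average centre-to-centre distance


def gravitational_force(mass_1_kg, mass_2_kg, distance_m):
    """Force proportional to the product of the masses and
    inversely proportional to the square of the distance."""
    return G * mass_1_kg * mass_2_kg / distance_m ** 2


force = gravitational_force(EARTH_MASS_KG, MOON_MASS_KG, EARTH_MOON_DISTANCE_M)
force_at_double = gravitational_force(
    EARTH_MASS_KG, MOON_MASS_KG, 2 * EARTH_MOON_DISTANCE_M
)

print(f"Earth-Moon attraction: {force:.2e} N")
print(f"Ratio after doubling the distance: {force / force_at_double:.1f}")
```

The second figure shows the inverse-square relationship at work: doubling the distance cuts the force to a quarter. This is the kind of prediction that can be checked against measurements.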

Now consider another example of a

generalization about human experience:

Pythagoras' Theorem illustrated (credit: Wikimedia)

In plain English, this formula, $a^2 + b^2 = c^2$, says: “the

square on the hypotenuse of a right triangle is equal to the sum of the squares

on the other two sides”.

The Pythagorean Theorem is a mathematical

law, but is it a scientific one? In other words, can it be tested in some

unshakable way in the physical world? (Can one measure the sides and know the

measures are perfectly accurate?)
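The difficulty raised in that parenthetical question can be made concrete with a small calculation. The sketch below assumes a right triangle with two sides of exactly one unit and a ruler that reads only to the nearest thousandth of a unit; both assumptions are invented for this illustration.

```python
import math

leg_a = 1.0
leg_b = 1.0

# The ideal hypotenuse is the square root of 2, which has no finite decimal form.
ideal_hypotenuse = math.sqrt(leg_a ** 2 + leg_b ** 2)

# Assumed measuring precision: three decimal places.
measured_hypotenuse = round(ideal_hypotenuse, 3)

# How far the measured triangle falls from satisfying the theorem exactly.
residual = measured_hypotenuse ** 2 - (leg_a ** 2 + leg_b ** 2)

print(f"measured hypotenuse: {measured_hypotenuse}")
print(f"c^2 - (a^2 + b^2):   {residual:.6f}")
```

However fine the ruler, the measured figures only approximate the relation. A physical test can support the theorem to within the precision of the instrument, but it cannot confirm it exactly.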

The harder problem occurs when we try to

analyze how true statements like Newton’s Laws of Motion or Darwin’s Theory of

Evolution are. These claim to be laws about things we can observe with our

senses, not things that may exist – and be true – only in the mind (like

Pythagoras’ Theorem).

Do statements of these laws express

unshakable truths about the real world or are they just temporarily useful

ways of roughly describing what appears to be

going on in reality – ways of thinking that are followed for a few decades

while the laws appear to work for scientists, but that then are seriously revised

or even dropped when we encounter new problems that the law can’t explain?

Many theories in the last 400 years have

been revised or dropped totally. Do we dare to say about any natural law

statement that it is true in the way in which “5 + 7 = 12” is true or the

Pythagorean Theorem is true?

This debate is a hot one in Philosophy,

even in our time. Many philosophers of Science claim natural law statements,

once they’re supported by enough experimental evidence, can be considered to be

true in the same way as valid mathematical theorems are. But there are also

many who say the opposite – that all scientific statements are tentative. These

people believe that, over time, all natural law statements get replaced by new

statements based on new evidence and new models or theories (as, for example,

Einstein's Theory of Relativity replaced Newton's Laws of Motion and Gravitation).

If all natural law statements are seen as

being, at best, only temporarily true, then Science can be seen as a kind of

fashion show whose ideas have a bit more shelf life than the fads in the usual

parade of TV shows, songs, clothes, makeup, and hairdos. In short, Science’s

law statements are just narratives, not true so much as useful, but useful only

in the lands in which they gain some currency and only for limited time periods

at best.

The logical flaws that can be found in

empiricist reasoning aren’t small ones. One major problem is that we can’t know

for certain that any of the laws we think we see in nature are true because

even the terms that we use when we make a scientific law statement are

vulnerable to attack by the skeptics.

When we state a natural law, the terms we

use to name the objects and events we want to focus on exist, the skeptics

argue, only in our minds. Even what makes a thing a “tree”, for example,

is dubious. In the real world, there are no trees. We just use the word “tree”

as a convenient label for some of the things we encounter in our world and for

our memories of them.

A simple statement that seems to us to

make sense, like the one that says hot objects will cause us pain if we touch

them, can’t be trusted in any ultimate sense. To assume this “law” is true is

to assume that our definitions for the terms hot and pain will

still make sense in the future. But we can’t know that. We haven’t seen the future.

Maybe, one day, people won’t feel pain.

Thus, all the terms in natural law

statements, even ones like force, atom, acid, gene, proton,

cell, organism, etc. are labels created in our minds because

they help us to sort and categorize sensory experiences and memories of those

experiences, and then talk to one another about what seems to be going on

around us. But reality does not contain things that somehow fit terms like “gene”

or “galaxy”. Giant ferns of a bygone geological age were not trees. But they

would have looked like trees to most people from our time who use the word “tree”.

How is a willow bush a bush, but not a tree? If you look through a powerful

microscope at a gene, it won’t be wearing a tag that reads “gene.”

In other languages, there are other terms,

some of which overlap in the minds of the speakers of that language with things

that English has a different word for entirely. In Somali, a gene is called

“hiddo”. And the confusions get even trickier. German contains two verbs for

the English word “know”, as does French. Spanish contains two words for

the English verb “be”.

We divide up and label our memories of

what we see in reality in whatever ways have worked reliably for us and our

ancestors in the past. And even how we see simple things is determined by what

we've been taught by our elders. In English, we have seven words for the colors

of the rainbow; in some other languages, there are as few as four words for all

the spectrum’s colors.

Right from the start, our natural law

statements gamble on the future validity of our human-invented terms for

things. The terms can seem solid, but they are still gambles. Some terms humans

once confidently gambled on turned out later, in light of new evidence, to be

naïve and inadequate.

Isaac Newton (artist: Godfrey Kneller) (credit: Wikimedia Commons)

Newton’s laws of motion are now seen by physicists

as being approximations of the relativistic laws described by Einstein.

Newton’s terms body, space, and force once seemed

self-evident. But it turned out that space is not what Newton assumed it to be.

A substance called phlogiston once

seemed to explain all of Chemistry. Then Lavoisier did experiments which showed

that phlogiston doesn’t exist.

On the other hand, people spoke of genes

long before microscopes that could reveal them to the human eye were invented,

and people spoke of atoms long before any instrument could produce an image of one. Some

terms last because they enable us to build mental models and do experiments

that get results we can predict. For now. But the list of scientific theories

that “fell from fashion” is long.

Chemists Antoine and Marie-Anne Lavoisier (credit: Wikimedia Commons)

Various further attempts have been made in

the last 100 years to nail down what Science does and to prove that it is a

reliable way to truth, but they have all come with conundrums of their own.

Now, while the problems described so far bother philosophers of

Science a lot, such problems are of little interest to the majority of

scientists themselves. They see the law-like statements they and their

colleagues try to formulate as being testable in only one meaningful way,

namely, by the results shown in replicable experiments done in the lab or in

the field. Thus, when scientists want to talk about what “knowing” is, they

look for models not in Philosophy, but in the branches of Science that study

human thinking, like neurology for example. However, efforts to find proof in

neurology that Empiricism is logically solid also run into problems.

The early empiricist John Locke basically

dodged the problem when he defined the human mind as a “blank slate” and saw

its abilities to perceive and reason as being due to its two “fountains of

knowledge,” sensation and reflection. Sensation, he said, is made up of current

sensory experiences and current reviews of categories of past experiences.

Reflection is made up of the “ideas the mind gets by reflecting on its own

operations within itself.” How these kinds of “operations” got into human

consciousness and what is doing the “reflecting” that he is talking about, he

doesn’t say.1

Modern empiricists, both philosophers of

Science and scientists themselves, don’t like their forebears giving in to even

this much mystery. They want to get to definitions of what knowledge is that

are solidly based in evidence.

Neuroscientists who aim to figure out what

the mind is and how it thinks do not study words. They study physical things,

like electroencephalograms recorded from the brains of people working on assigned

tasks.

For today’s scientists, philosophical discussions

about what knowing is are just words chasing words. Such discussions can’t

bring us any closer to understanding what knowing is. In fact, scientists don’t

respect discussions about anything we may want to study unless those

discussions are based on a model that can be tested in the real world.

Scientific research, to qualify as

“scientific”, must also be designed so it can be replicated by any

researcher in any land or era. Otherwise, it’s not credible; it could be a

coincidence, a mistake, wishful thinking, or simply a lie. Thus, for modern

scientists, analysis of physical evidence is the only means by which they can

come to understand anything, even when the thing they are studying is what’s

happening in their brains while they are studying those brains.

The researcher sees a phenomenon in

reality, gets an idea about how it works, then designs experiments that will

test his theory. The researcher then does the tests, records the results, and

reports them. The aim of the process is to arrive at statements about reality

that will help to guide future research onto fruitful paths and will enable other

scientists to build technologies that are increasingly effective at predicting

and manipulating events in the real world.

For example, electro-chemical pathways

among the neurons of the brain can be studied in labs and correlated with

subjects’ descriptions of their actions. (The state of research in this field

is described by Delany in an article that is available online and also by

Revonsuo in Neural Correlates of Consciousness: Empirical and

Conceptual Questions, edited by Thomas Metzinger.2,3)

Observable things are the things scientists

care about. The philosophers’ talk about what thinking and knowing are is just

that – talk.

As an acceptable alternative to the study

of brain structure and chemistry, scientists interested in thought also study

patterns of behavior in organisms like rats, birds, and people, behavior

patterns elicited in controlled, replicable ways. We can, for example, try to

train rats to work for wages. This kind of study is the focus of Behavioural Psychology.

(See William Baum’s 2005 book Understanding Behaviorism.4)

As a third alternative, we can even try to

program computers to do things as similar as possible to things humans do. Play

chess. Write poetry. Cook meals. If the computers then behave in human-like

ways, we should be able to infer some testable theories about what thinking and

knowing are. This research is done in a branch of Computer Science called

“Artificial Intelligence” or “AI”.

Many empiricist philosophers see AI as our

best hope for defining, once and for all, what human thinking is. AI offers a

model of our own thinking that will explain it in ways that can be tested. A

program written to simulate thinking either runs or it doesn’t, and every line

in it can be examined. When we can write programs that make computers converse

with us so well that, when we talk to them, we can’t tell whether we’re talking

to a human or a computer, we will have encoded what thinking is. Set it down in

terms programmers can explain with algorithms. Run the program over and over and

observe what it does.

With the rise of AI, cognitive scientists

felt that they had a real chance of finding a model of thinking that worked,

one beyond the challenges of the critics with their counterexamples. (A

layman’s view on how AI is doing can be found in Tom Meltzer’s article

in The Guardian, June 17, 2012.5)

Testability in physical reality and

replicability of the tests, I repeat, are the characteristics of modern Empiricism

(and of all Science). All else, to modern empiricists, has as much reality and

as much reliability to it as creatures in a fantasy novel. Ents. Orcs. Sandworms.

Amusing daydreams, nothing more.

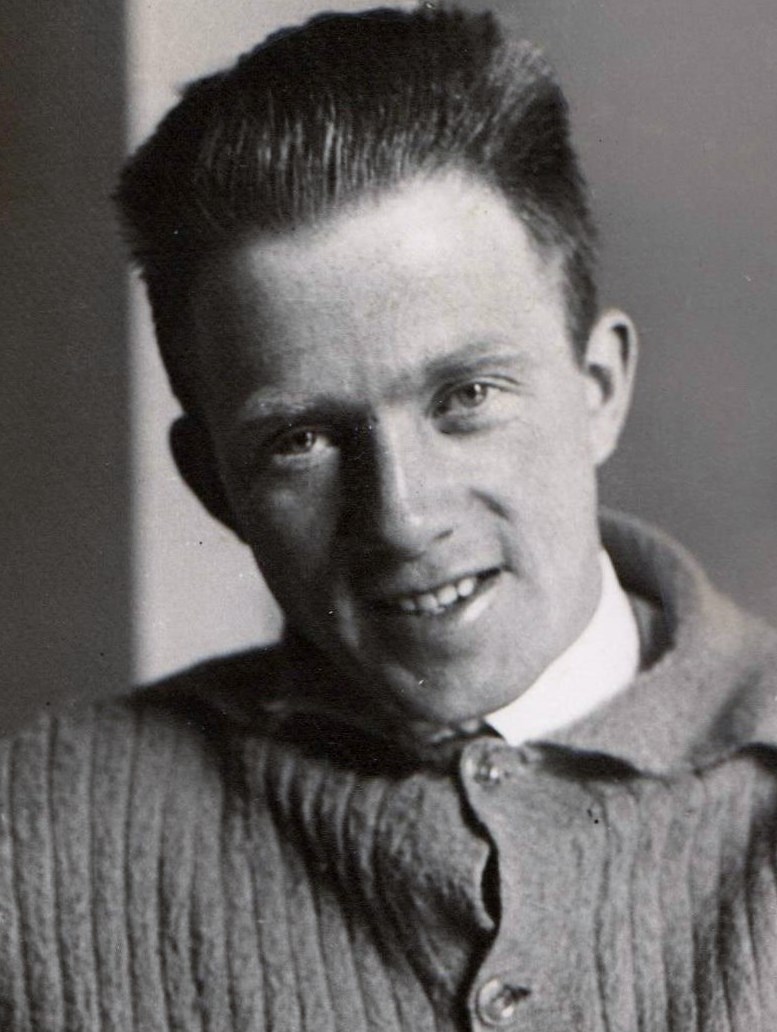

Kurt Gödel (credit: Wikimedia Commons)

For years, the most optimistic of the

empiricists looked to AI for models of thinking that would work in the real

world. Their position has been cut down in several ways since those early days.

What exploded it for many was the proof found by Kurt Gödel, Einstein’s

companion during his lunch hour walks at Princeton. Gödel showed that any

consistent, rigorous system of symbols rich enough to express basic Arithmetic

contains true statements that cannot be proved inside the system; such a system

cannot be both consistent and complete. By extension, many argue, no system of

computer coding can ever fully account for itself.

Gödel’s proof is difficult for laypersons to follow, but

non-mathematicians don’t need to be able to do formal logic in order to grasp

what his proof implies about everyday thinking. (See Hofstadter for an

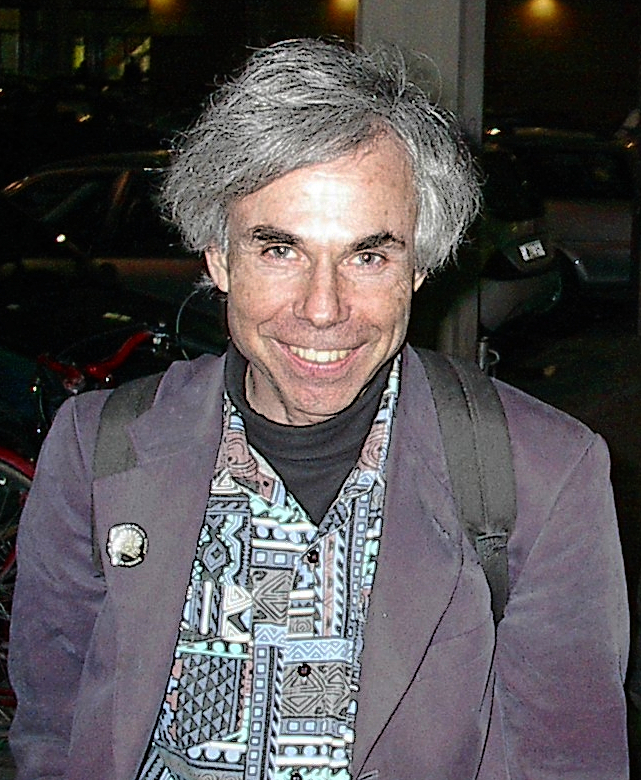

accessible critique of Gödel.6)

Douglas Hofstadter (credit:

Wikipedia)

If we take what it says about Arithmetic

and extend that finding to all kinds of thinking, Gödel’s proof says no symbol system

for expressing our thoughts will ever be powerful enough to enable us to

express all the thoughts about thoughts that human minds can dream up. In

principle, there can’t be such a system. In short, what a human programmer

does as she fixes flaws in her programs is not programmable.

What Gödel’s proof implies is that no way

of modelling the human mind will ever adequately explain what it does. Not in

English, Logic, French, Russian, Chinese, Java, C++, or Martian. We will always

be able to generate thoughts, questions, and statements that we can’t express

in any one symbol system. If we find a system that can be used to express some

of our ideas really well, we discover that no matter how well the system is

designed, no matter how large or subtle it is, we have other thoughts that we

can’t express in that system at all. Yet we must make statements that at least

attempt to communicate all our ideas. Science is social. It has to be shared in

order to advance.

Other results in Computer Science echo

Gödel’s theorem. For example, in the early days of the development

of computers, programmers were frequently creating programs with “loops” in

them. After a program had been written, when it was run, it would sometimes

become stuck in a subroutine that would repeat a sequence of steps from, say,

line 79 to line 511 then back to line 79, again and again. Whenever a program

contained this kind of flaw, a human being had to stop the computer, go over

the program, find why the loop was occurring, then either rewrite the loop or

write around it. The work was frustrating and time consuming.

Soon, a few programmers got the idea of

writing a kind of meta-program they hoped would act as a check. It would scan

other programs, find their loops, and fix them, or at least point them out to

programmers so they could fix them. The programmers knew that writing a check

program would be hard, but once it was written, it would save many people a

great deal of time.

However, progress on the writing of this

check program met with problem after problem. In fact, Alan Turing had shown in

1936, before electronic computers existed, that a general check program isn’t possible. A foolproof

algorithm for deciding whether any other algorithm will loop forever is, in principle, impossible.

(Wikipedia, “Halting Problem”.7) This finding in Computer

Science, the science many see as our bridge between the abstractness of

thinking and the concreteness of material reality, is Gödel all over

again. It confirms our deepest feelings about Empiricism. Empiricism is useful,

but it is doomed to remain incomplete. It can’t explain itself.
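The core of Turing’s argument can be sketched in Python. This is a simplified illustration, not Turing’s own formalism. The names `make_contrarian` and `defeats` are invented here, every checker is assumed to be deterministic and to return an answer, and the contrarian program reports "loops forever" as a string rather than actually looping, so that the demonstration itself finishes.

```python
def make_contrarian(checker):
    """Build a program that does the opposite of what `checker` predicts for it."""

    def contrarian():
        if checker(contrarian):
            # The checker said "this halts", so the contrarian would loop.
            # We return a description instead of spinning forever.
            return "loops forever"
        # The checker said "this loops", so the contrarian stops at once.
        return "halts"

    return contrarian


def defeats(checker):
    """Return True if the contrarian built from `checker` proves it wrong."""
    contrarian = make_contrarian(checker)
    predicted_to_halt = checker(contrarian)
    actually_halts = contrarian() == "halts"
    return predicted_to_halt != actually_halts


# Three hypothetical check programs, each using a different rule.
def optimist(program):
    return True  # claims every program halts


def pessimist(program):
    return False  # claims every program loops


def name_based(program):
    return program.__name__ != "contrarian"  # an arbitrary "clever" rule


for checker in (optimist, pessimist, name_based):
    print(checker.__name__, "is defeated:", defeats(checker))
```

Whatever rule the check program uses, a contrarian can be built from it, so no single checker can be right about every program. That is the sense in which a foolproof loop finder is impossible in principle.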

Arguments and counterarguments on this

topic are fascinating, but for our purposes in trying to find a base for a

philosophical system and a moral code, the conclusion is much simpler. The more

we study both theoretical models and real-world evidence, including evidence

from Science itself, the more we are driven to conclude that the empiricist way

of understanding what thinking is will probably never explain its own method of

reaching that understanding. Empiricism’s own methods have ruled out the

possibility of it being a base for epistemology. (What is the meaning of meaning?)

(Solve x² + 1 = 0).

My last few paragraphs describe only the

dead ends that have been hit in AI. Other sciences searching for this same holy

grail – a clear, evidence-backed model of human thinking – haven’t fared any

better. Neurophysiology and Behavioural Psychology also keep striking out.

If a neurophysiologist could set up an MRI

or similar imaging device and use his model of thinking to predict which

networks of neurons in his brain would be active when he turned the device on

and studied pictures of his own brain activities, then he could say he had set

down a reliable working model of what consciousness is. ("Those are my

thoughts: those neuron firings right there.") But neuroscience is not even

close to being that complete.

Patterns of neuron firings mapped on one

occasion when a subject performs even a simple task can’t be counted on. We

find different patterns every time we look. A human brain contains on the order of a hundred

billion neurons, each one capable of connecting to as many as ten thousand

others. The number of possible patterns is not literally infinite, but it is astronomically large. And the patterns of firings in that brain are changing

all the time. Philosophers who seek a base for Empiricism strike out if they look

for it in Neurophysiology.8

Diagram of a Skinner Box (credit: Wikimedia Commons)

Problems similar to those in AI and

Neurophysiology also beset Behavioral Psychology. Researchers can train rats,

pigeons, or other animals and predict what they will do in controlled

experiments, but when a behaviorist tries to give behaviorist explanations for

what humans do, many exceptions have to be made. A claim like "There's

the mind: a set of behaviors we can replicate at any time" is not yet

within reach of Behavioral Psychology.

In a simple example, alcoholics who say

they truly want to get sober for good can be given a drug, disulfiram, that makes them

violently ill if they imbibe even small amounts of alcohol, but that does not

affect them as long as they do not drink alcohol. This would seem to be a

behaviourist’s solution to alcoholism, one of society’s most painful problems.

But it doesn’t work. Thousands of alcoholics in early studies kept their

self-destructive ways while on disulfiram.9 What is going on in

these cases is obviously much more complex than Behaviorism can account for.

And this is but one commonplace example.

I am not disappointed to learn that humans

turn out to be complex, evolving, and impossible to pin down, no matter the

model we analyze them under.

At present, it appears that Science can’t

provide a rationale for itself in theory and can’t demonstrate the reliability

of its methods in practice. Could it be another set of temporarily effective

illusions, like Christianity, Communism, or Nazism once were? Personally, I

don’t think so. The number of Science’s achievements and their profound effects

on our society argue powerfully that Science works in some profound way, even

though it can’t explain itself.

Do Science’s laws sometimes fail glaringly

in the real world? Yes. Absolutely. Newton’s Laws of Motion turned out to be

inadequate for explaining data drawn from more advanced observations of

reality. The mid-1800s brought better views of the universe provided by better

telescopes. These led Physics past Newton’s laws, and on to the Theory of

Relativity. Newton’s picture turned out to be too simple, though it was useful

on the everyday scale.

Thus, considering how revered Newton’s

model of the cosmos once was and knowing that it gives only a partial, inadequate

picture of the universe can cause philosophers – and ordinary folk – to doubt

Science. We then question whether Empiricism can be trusted as a base to help

us design a new moral code. Our survival is at stake. Science can’t

even explain its own thinking.

As we seek to build a moral system we can

all live by, we must look for a way of thinking about thinking based on

stronger logic, a way of thinking about thinking that we can believe in. We

need a new model, built around a core philosophy that is different from

Empiricism, not just in degree but in kind.

Empiricism’s disciples have achieved some

impressive results in the practical sphere, but then again, for a while in

their heydays, so did Christianity, Communism, and Nazism. They even had their

own “sciences,” dictating in detail what their scientists should study and what

they should conclude.

Perhaps the most disturbing example of a

worldview that seemed to work very well for a while is Nazism. The Nazis

claimed to base their ideology on Empiricism and Science. In their propaganda

films and in all academic and public discourse, they preached a warped form of

Darwinian evolution that enjoined and exhorted all nations, German or

non-German, to go to war, seize territory, and exterminate or enslave all

competitors – if they could. They claimed this was the way of the real world.

Hitler and his cronies were gambling confidently that in this struggle, those

that they called the “Aryans” – with the Germans in the front ranks – would

win.

Nazi leader Adolf Hitler (credit: Wikimedia Commons)

“In eternal warfare, mankind has become great; in eternal peace, mankind would be

ruined.” (Mein Kampf)

Such a view of human existence, they

claimed, was not cruel or cynical. It was a mature, realistic acceptance of the

truth. If people calmly and clearly look at the evidence of History, they can

see that war always comes. Mature, realistic adults, the Nazis claimed, learn

and practice the arts of war, assiduously in times of peace and ruthlessly in

times of war. According to the Nazis, this was merely a logical consequence of

accepting that the survival-of-the-fittest rule governs all life, including

human life.

Hitler’s ideas about race and about how

Darwinian evolution could be applied to humans were unsupported by the real science of

Genetics. Hitler’s views of “race” were silly. But in the Third

Reich, this was never acknowledged.

Werner Heisenberg (credit: Wikimedia Commons)

And for a while, Nazism worked. The Nazi

regime rebuilt what had been a shattered Germany. But the sad thing about the

way smart men like physicist Werner Heisenberg, biologist Ernst Lehmann, and

chemist Otto Hahn became tools of Nazism is not that they became its tools. The

really disturbing thing is that their worldviews as scientists did not equip

them to break free of the Nazis’ distorted version of Science. As I pointed out

earlier, their religion failed them. But Science failed them too.

Otto Hahn (credit: Wikimedia Commons)

There is certainly evidence in human

history that the consequences of Science being misused can be horrible. Nazism

became humanity’s nightmare. Some of its worst atrocities were committed in the

name of Science.10 Under Nazism, medical experiments especially

surpassed all nightmares.

For practical, evidence-based reasons,

then, as well as for theoretical ones, millions of people around the world

today have become deeply skeptical about all systems of thought and, in moral

matters, about the moral usefulness of Science in particular. At deep levels,

we are driven to wonder: Should we trust something as critical as the survival

of our culture and our grandchildren, even our Science itself, to a way of

thinking that, first, can’t explain itself, and second, has had horrible, practical

failures in the past? Science can put men on the moon, grow crops, and cure

diseases. But as a moral guide, even for its own activities, so far it looks

very unreliable.

In the meantime, we must get on with

trying to build a universal moral code. Reality won’t let us procrastinate. It

forces us to think, choose, and act every day. To do these things well, we need

a comprehensive moral guide, one that we can refer to in daily life as we

observe our world and choose our actions.

As a base for that guide, Empiricism looks

unreliable. Is there something else to which we might turn?

Notes

1. John Locke, An Essay Concerning

Human Understanding (Glasgow: William Collins, Sons and Co., 1964), p.

90.

2. Donelson E. Delany, “What Should Be the

Roles of Conscious States and Brain States in Theories of Mental Activity?”

PMC Mens Sana Monographs 9, No. 1 (2011): 93–112. http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3115306/.

3. Antti Revonsuo, “Prospects for a

Scientific Research Program on Consciousness,” in Neural Correlates of

Consciousness: Empirical and Conceptual Questions, ed. Thomas Metzinger

(Cambridge, MA, & London, UK: The MIT Press, 2000), pp. 57–76.

4. William Baum, Understanding

Behaviorism: Behavior, Culture, and Evolution (Malden, MA: Blackwell

Publishing, 2005).

5. Tom Meltzer, “Alan Turing’s Legacy: How

Close Are We to ‘Thinking’ Machines?” The Guardian, June 17, 2012.

http://www.theguardian.com/technology/2012/jun/17/alan-turings-legacy-thinking-machines.

6. Douglas R. Hofstadter, Gödel,

Escher, Bach: An Eternal Golden Braid (New York, NY: Basic Books,

1999).

7. “Halting Problem,” Wikipedia, the Free Encyclopedia. Accessed April 1, 2015. http://en.wikipedia.org/wiki/Halting_problem.

8. Alva Noë and Evan Thompson, “Are There

Neural Correlates of Consciousness?” Journal of Consciousness Studies 11,

No. 1 (2004), pp. 3–28.

http://selfpace.uconn.edu/class/ccs/NoeThompson2004AreThereNccs.pdf.

9. Richard K. Fuller and Enoch Gordis,

“Does Disulfiram Have a Role in Alcoholism Treatment Today?” Addiction 99,

No. 1 (Jan. 2004), pp. 21–24.

http://onlinelibrary.wiley.com/doi/10.1111/j.1360-0443.2004.00597.x/full.

10. “Nazi Human Experimentation,” Wikipedia, the Free Encyclopedia.

Accessed April 1, 2015.

http://en.wikipedia.org/wiki/Nazi_human_experimentation.
