Chapter 10 The Second Attack on Bayesianism and a Response to It

A number of math formulas have been

devised to represent the Bayesian way of explaining how we learn, and then know,

a new theory of reality. They look complicated, but they really aren’t that

hard. I have chosen one of the more intuitive ones to use in my discussion of

the main theoretical flaw that critics think they see in Bayesian Confirmation

Theory.

The Bayesian model of how a human’s way of

thinking evolves can be broken down into a few basic parts. When I, a typical

human, examine a new way of explaining what I see going on in the world – a

theory – I try to judge how true and useful a picture of the world this new

theory may give me. I look for ways to test it, ways that will show me whether

this new theory of reality will help me to get reliable, positive, useful results in

the real world. I want to understand events in my world better and respond to

them more effectively.

For Bayesians, any theory worth

investigating always enables us to form specific hypotheses that can be tested

in the real world. I can’t test the Theory of Gravitation by moving the moon

around, but I can drop balls from towers here on Earth to see whether they take

as long to fall as the theory says they will. I can’t watch the evolution of

the living world for three billion years, but I can observe a particular insect

species that is being sprayed with a new pesticide every week and predict,

based on the Theory of Evolution, that these insects will be immune to the

pesticide by the end of this summer.

When I encounter a real-world situation

that will let me formulate a specific hypothesis based on the theory, and then I

test that hypothesis, I tend to lean more toward believing the hypothesis and

the theory underlying it if it enables me to

make accurate predictions. I lean toward discarding it if the

predictions it leads me to make keep turning out wrong. I am especially

inclined to believe the theory if all my other theories are silent or

inaccurate when it comes to predicting and explaining the test results.

In short, I tend to believe a new idea

more and more if it successfully explains what I see. This model of how we learn

and come to know new ideas can be expressed in a math formula.

Let Pr(H/B) be the probability

Pr I assign to a hypothesis H based just on

the background beliefs B that I had before I considered this new

hypothesis. If the hypothesis seems far-fetched to me, this term will be small,

maybe under 1%.

Now, let Pr(E/B) be

the degree to which I expected to see the evidence E based only on

my old familiar background ideas B about how reality works. If I am about to see an event that I will find hard to believe, then my expectation before that event, Pr(E/B), will also be low, again likely under 1%.

Note that these terms are not fractions. The forward slash in the way they’re written does not signal division; it is read as “given”.

The term Pr(H/B) is called my prior confidence in

the hypothesis. It stands for my estimate of the probability that the

hypothesis is correct if I base that estimate only on how

well the hypothesis fits my familiar old set of background assumptions about

reality. It doesn’t say anything like “hypothesis divided by background”.

The term Pr(E/H&B) is

my estimate of the probability that the evidence will happen if I assume –

just for the sake of this term – that my background assumptions and

this new hypothesis are both true, i.e. if for a short while I

try to think as if the hypothesis, along with the theory it comes from, is right.

The most important part of the equation

is Pr(H/E&B). It represents how much I am starting to believe

that the hypothesis H must be right, now that I’ve seen this

new evidence, all the while assuming the evidence E is as I saw it, not a trick

of some kind, and the rest of my old beliefs B are still in place.

Thus, the whole probability formula that

describes this relationship can be expressed in the following

way:

$$\Pr(H/E\&B) = \frac{\Pr(E/H\&B) \times \Pr(H/B)}{\Pr(E/B)}$$

While this formula looks daunting, it

actually says something fairly simple. A new hypothesis/theory that I am trying

to understand seems more likely to be correct the more I keep encountering

evidence that the hypothesis can predict and that my old models of reality

can’t predict. When I set the values of these terms – as probabilities expressed

by percentages – I will assume, for the time being, that the evidence E

is as I saw it, not a mistake or trick, and that I still accept the rest of my

background ideas B about reality as being valid, which I have

to do if I am to make sense of what I see in my surroundings at all.

I more and more tend to believe a

hypothesis is true the bigger Pr(E/H&B) gets and the smaller Pr(E/B) gets.

In other words, I increasingly tend to

believe that a new way of explaining the world is true the more it works to

explain evidence I keep encountering in real world experiments and studies, and

the less I can explain that evidence if I don’t accept the new hypothesis.
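For readers who like to see numbers, here is a minimal sketch of the formula in Python. The function name `posterior`, its parameter names, and all the values fed into it are my own illustrative assumptions, not measurements of anyone's actual beliefs.

```python
def posterior(prior_h, e_given_h_and_b, e_given_b):
    """Return Pr(H/E&B) = Pr(E/H&B) * Pr(H/B) / Pr(E/B).

    All three inputs are probabilities between 0 and 1.
    """
    for value in (prior_h, e_given_h_and_b, e_given_b):
        if not 0.0 <= value <= 1.0:
            raise ValueError("each probability must lie between 0 and 1")
    if e_given_b == 0.0:
        raise ValueError("Pr(E/B) must be greater than 0")
    joint = e_given_h_and_b * prior_h
    # Coherence check: E cannot be less expected overall than the part of
    # it that H accounts for, or the result would exceed 1.
    if joint > e_given_b:
        raise ValueError("Pr(E/B) is too small to be consistent with the other terms")
    return joint / e_given_b


# Illustrative values only (assumptions, not data).
PRIOR = 0.01    # Pr(H/B): the hypothesis seems far-fetched
E_IF_H = 0.90   # Pr(E/H&B): the hypothesis strongly predicts E

surprising = posterior(PRIOR, E_IF_H, 0.05)    # old ideas barely expected E
unsurprising = posterior(PRIOR, E_IF_H, 0.50)  # old ideas half expected E

print(f"E was surprising:   Pr(H/E&B) = {surprising:.3f}")
print(f"E was unsurprising: Pr(H/E&B) = {unsurprising:.3f}")
```

With these made-up inputs, my confidence in the hypothesis climbs from 1% to about 18% when my old ideas gave the evidence only a 5% chance, but only to about 1.8% when they already gave it a 50% chance. The smaller Pr(E/B) is, the more the same evidence counts.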

So far, so good.

Now, all of this may begin to seem

intuitive, but once we have a formula set down it is open to attack; the

critics of Bayesianism see a flaw in it that they consider fatal. The flaw they

see is called “the problem of old evidence”.

One of the ways a new hypothesis/theory

gets more respect among experts in the field the hypothesis covers is by its

ability to explain old evidence that old theories in the field have so far been

unable to explain. For example, physicists all over the world felt that the

probability that Einstein’s Theory of Relativity was correct took a huge jump

upward when he used his theory to account for the gradual changes in the orbit

of the planet Mercury – changes that were familiar to physicists, but that had

defied explanation by the old Newtonian model of the universe and the equations

it offers.

The constant shift in Mercury’s orbit had

baffled astronomers since they had first acquired telescopes that enabled them

to detect that shift. The shift could not be explained by any pre-Relativity

models. But Relativity Theory could explain this shift and make extremely

accurate predictions about it.

Other examples of theories that worked to

explain old evidence in many other branches of Science could easily be listed.

Kuhn gives lots of them.1

What is wrong with Bayesianism, according

to its critics, is that it can’t explain why we give more credence to a theory

when we realize it can be used to explain unexplained old evidence. Critics say

when the formula above is applied in this situation, Pr(E/B) has to

be considered equal to 100% (absolute certainty) because the old evidence has

been seen so many times by so many.

For the same reasons, Pr(E/H&B) also has to be thought of as equal to 100%, because the evidence has been reliably observed and recorded many times – since long before we ever had this new theory/hypothesis to consider.

When these two 100% probabilities are put

into the equation, it becomes this:

$$\Pr(H/E\&B) = \Pr(H/B)$$

This new version of the formula emerges

because Pr(E/B) and Pr(E/H&B) are now both equal to 100

percent, or a probability of 1.0, and thus they can be cancelled out of the

equation.
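Written out one step at a time, in the same notation as the original formula, the critics' substitution runs roughly like this:

$$\Pr(H/E\&B) = \frac{\Pr(E/H\&B) \times \Pr(H/B)}{\Pr(E/B)} = \frac{1.0 \times \Pr(H/B)}{1.0} = \Pr(H/B)$$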

But that means when I realize this new

theory that I’m considering can be used to explain some nagging old problems in

my field, my confidence in the new theory does not rise at all. Or to put the

matter another way, after seeing the new theory explain some troubling old

evidence, I trust the theory not one jot more than I did before I realized it

might explain that old evidence.

This is simply not what happens in real

life. When we realize that a new model or theory that we are considering can be

used to explain some old evidence that previously had not been explainable, we

are definitely more inclined to believe that the new theory is correct.

Proving Germ Theory

Pasteur in his laboratory (artist: Edelfelt) (credit: Wikimedia Commons)

An indifferent reaction to a new theory’s

being able to explain confusing old evidence is simply not what happens in real

life. When physicists around the world realized that the Theory of Relativity

could be used to explain the shift in the orbit of Mercury, their confidence

that the theory was correct shot up. Most humans are not just persuaded but

exhilarated when a new theory they are beginning to understand gives them

solutions to unsolved old problems.

Hence, the critics say, Bayesianism is

obviously not adequate as a way of describing human thinking. It can’t

account for some of the ways of thinking that we know we use. We do indeed test

new theories against puzzling, old evidence all the time, and we do feel much

more impressed with a new theory if it can account for that same evidence when

all the old theories can’t.

The response in defense of Bayesianism is

complex, but not that complex. What the critics seem not to grasp is the spirit of

Bayesianism. In the Bayesian way of seeing reality and our relationship to it,

everything in the human mind is morphing and floating. The Bayesian picture of

the mind sees us as testing, reassessing, and updating all our ways of

understanding reality all the time.

In the formula above, the term for my

degree of confidence in the evidence, when I take only my background beliefs as

true – i.e. Pr(E/B) – is never 100%. Not even for

very familiar old evidence. Nor is the term for my degree of confidence in the

evidence if I include the hypothesis in my set of mental assumptions – Pr(E/H&B) –

ever equal to 100%. I am never perfectly certain of anything, not of my background assumptions and not even of physical evidence I have seen repeatedly with my own eyes.

To closely consider this situation in

which a hypothesis is used to try to explain old evidence, we need to examine

the kinds of things that occur in the mind of a researcher in both the

situation in which the new hypothesis does fit the old evidence and the one in

which it doesn’t.

When a hypothesis explains some old

evidence, what the researcher affirms is that, in the term Pr(E/H&B), the

evidence fits the hypothesis, the hypothesis fits the evidence, and the

background assumptions can be integrated with the hypothesis in a comprehensive

way. The researcher is delighted to see that committing to this hypothesis, and

the theory underlying it, will provide reassurance that the old evidence did

happen in the way in which the researcher and her colleagues observed it. In short, they can feel reassured that they did the work well. They did not make any mistakes. They really did see what they thought they saw.

Fear of making an observation mistake haunts scientists. It’s reassuring for them when they can tell themselves more confidently that they didn’t mess up.

All these logical and psychological factors raise the researcher’s confidence that this new hypothesis, and the theory behind it, must be right when she sees that it explains problematic old evidence.

This insight into the workings of Bayesian

confirmation theory becomes even clearer when we consider what the researcher

does when she finds that a hypothesis does not successfully account for the old

evidence. In research, only rarely does a researcher in this situation simply

drop the new hypothesis. Instead, the researcher usually examines the

hypothesis, the old evidence, and her background assumptions to see whether any

of them may be adjusted, using new concepts involving newly proposed variables

or closer observations of the old evidence, so that all the elements in the

Bayesian equation may be brought into harmony again. The researcher gives the hypothesis thorough consideration and every chance to prove itself.

When the old evidence is examined in light

of the new hypothesis, if the hypothesis successfully explains that old

evidence, the researcher’s confidence in the hypothesis and confidence

in that old evidence both go up. Even if prior confidence in that old evidence

was really high, the researcher can now feel more confident that she and her

colleagues – even ones in the distant past – did observe that old evidence

correctly and did record their observations well.

The value of this successful application of the new hypothesis to the old evidence may be small. Perhaps it raises the researcher’s confidence in E, within the term Pr(E/H&B), by only a fraction of 1 percent. But that is still an increase in the value of the whole term, and therefore it supports the hypothesis/theory being considered.

Meanwhile, Pr(H/E&B), i.e.

the scientist’s degree of confidence in the truth of the new hypothesis, also

goes up another notch as a result of the increase in her confidence in the old evidence.

A scientist, like all of us, finds reassurance in the feeling of mental harmony

that comes when more of her perceptions, memories, and concepts about reality

are brought into consonance with each other. (She feels

relieved whenever her cognitive dissonance drops a bit.)
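The difference between the critics’ reading and this reply can be sketched with a few lines of Python. The function name `updated_confidence` and every number below are hypothetical, chosen only to illustrate the point. In particular, I am assuming that Pr(E/H&B) ends up a fraction of a percent above Pr(E/B), and that neither reaches 100%.

```python
def updated_confidence(prior_h, e_given_h_and_b, e_given_b):
    """Pr(H/E&B) computed from Pr(H/B), Pr(E/H&B), and Pr(E/B)."""
    return e_given_h_and_b * prior_h / e_given_b


PRIOR_H = 0.20  # Pr(H/B): an assumed starting confidence in the hypothesis

# Critics' reading: old evidence is treated as absolutely certain,
# so both evidence terms equal 1.0 and cancel.
critics = updated_confidence(PRIOR_H, 1.0, 1.0)

# The reply's reading: even familiar old evidence falls a little short of
# certainty, and it is slightly more believable once H is assumed.
reply = updated_confidence(PRIOR_H, 0.995, 0.990)

print(f"critics' reading: Pr(H/E&B) = {critics:.4f}")  # 0.2000, no change
print(f"reply's reading:  Pr(H/E&B) = {reply:.4f}")    # 0.2010, a small rise
```

The rise here is tiny, about a tenth of a percentage point, but it is a rise, which is all the reply needs. How large it is in practice depends entirely on the gap between the two evidence terms, and the formula by itself does not say what that gap should be.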

A human mind experiences cognitive

dissonance when it keeps observing evidence that does not fit any of its

models. A person attempting to explain old evidence that is inconsistent with

his worldview often clings to his background beliefs and shuts out the new

theory his colleagues are discussing. He keeps insisting that this evidence

can’t be correct. Some systemic error must be leading other researchers to

think they have observed E, but they must be mistaken. E is

not what they say it is. “That can’t be right,” he says.

In the meantime, his subversive colleague

down the hall, even if only in her own mind, is arguing “I know what I saw. I

know how careful I’ve been. E is right. Thus, the probability

of H, at least in my mind, has grown. It’s such a relief to

see a way out of the cognitive dissonance I’ve been experiencing for the last

few months. I get it now. Wow, this feels good!” Settling a score with a

stubborn bit of old evidence that refused to fit into any of a scientist’s

models of reality is a bit like finally whipping a bully who picked on her in

elementary school – not really logical, but still very satisfying.

Normally, testing a new theory involves devising

a hypothesis based on that theory and then doing an experiment that will test

the hypothesis. If the experiment delivers the evidence that was predicted by

the hypothesis, but not predicted by my background concepts, then the theory

that the hypothesis is based on seems to me more likely to be true.

But I may also decide to try to use a

hypothesis and the theory it is based on to explain some problematic old

evidence. If I find that the theory does explain that problematic old evidence,

what I’m confirming is not just the hypothesis and its base theory. I have also

found a consistency between the old evidence, the new theory/hypothesis, and

all or nearly all of my background concepts. (Sadly, it is likely that I will

have to drop a few of my old ways of thinking to make room for the new theory.)

Levitation (Is it real?)

(credit: Massimo Barbieri, via Wikimedia Commons)

This is why a new theory/hypothesis

explaining some problematic old evidence so deeply affects how much we believe

in the new theory. Our human feelings are engaged and reassured when the new

theory relieves some of our cognitive dissonance. The exhilaration we feel

mostly isn’t logical. But it is human.

And no, it is not obvious that evidence

seen with my own eyes is ever 100% reliable, not even if I’ve seen a particular

phenomenon repeated many times. Neither my familiar background concepts nor the

sense data I see in everyday experiences are trusted that much. If they were,

then I and anyone who trusts gravity, light, and human anatomy would be unable

to watch a good magic show without having a nervous breakdown. Elephants

disappear, men float, and women get sawn in half. If my most basic concepts

were believed at the 100% level, then either I’d have to gouge my eyes out or

go mad.

But I know the magic is a trick of some

kind. And I choose, for the duration of the show, to suspend my desire to

harmonize all my sense data with my set of background concepts. It is supposed

to be a performance of fun and wonder. If I explain how the trick is done, I

ruin my grandkids’ fun …and my own.

It’s important to point out here that the

idea behind H&B, the set of the new hypothesis/theory plus my

background concepts, is also more complex than the equation can capture. This

part of the formula should be read: “If I integrate the hypothesis into my

whole background concept set.” The formula attempts to capture in symbols

something that is almost not capturable. This is because the point of positing

a hypothesis, H, is that it doesn’t fit neatly

into my background set of beliefs. It is built around a new way of

comprehending reality and thus, it will only be fully integrated into my old

background set of concepts and beliefs if some of those old concepts are

adjusted by careful, gradual tinkering, and some are removed entirely.

Similarly, in the term Pr(H/E&B),

E&B is trying to capture something no math term can capture. E&B is

trying to say: “If I take both the evidence and my set of background beliefs to

be 100% reliable.”

But that way of stating the E&B part

of the term merely highlights the issue of problematic old evidence. This

evidence is problematic because I can’t make it consistent

with all of my background concepts and beliefs, no matter how I tinker with

them.

All the whole formula really does is try

to capture the gist of human thinking and learning. It is a useful metaphor;

but we can’t become complacent about this formula for the Bayesian model of

human thinking and learning any more than we can become complacent about any of

our concepts. And that thought is consistent with the spirit of Bayesianism. It

tells us not to become too blindly attached to any of our concepts, not even

how we think about how we think. Any of them may have to be updated and revised

at any time.

For all these reasons, the criticism of

Bayesianism which says it can’t explain why we find a fit between a hypothesis

and some problematic old evidence reassuring turns out not to be a fatal

criticism at all. It is more a useful mental tool, one that we may use to

deepen our understanding of the Bayesian model.

The Bayesian model tells us to accept that

all the patterns of neuron firings in the brain – i.e. all the hypotheses, bits

of evidence, and background concepts – are forming, reforming, aligning,

realigning, and floating in and out of one another all the time – even concepts

as basic as the ones we have about gravity, matter, space, and time. This whole

view of “Bayesianism” arises if we simply apply Bayesianism to itself.

In short, Bayesianism says we keep

adjusting our thinking until we die.

The Bayesian way of thinking about our own

thinking requires us to be willing to float all our concepts, even our most

deeply held ones. Some are more central, and we use them more often with more

confidence. A few we may believe almost absolutely. But none of our concepts is

irreplaceable.

For humans, the mind is our means of surviving.

Thus, it will adapt to almost anything. Let war, famine, plague, economics, and

technology do what they will. Rattle our living styles and ways until they

crumble. We adjust. We go on.

We gamble heavily on the concepts we

routinely use to organize our sense data and memories of sense data. I use my

concepts to organize the memories already stored in my brain, and the new sense

data that are flooding into my brain, all the time. I keep trying to learn more

concepts – including concepts for organizing other concepts – that will enable

me to utilize my memories more efficiently to make faster, better decisions and

to act more and more effectively. In this constant, restless, searching mental

life of mine, I never trust anything absolutely.

But I choose to stand by my most basic concepts

even at a magic show, not because I am certain they’re right, but because

they’ve been tested and found effective over so many trials for so long that

I’m willing to keep gambling on them. At least until someone proposes something

even more promising to me. I don’t know for certain that the theories of the

real world that my culture has programmed into me are sure bets; they just seem

very likely to be the most promising options available to me now. And I need some

theories about space, matter, etc. every day. I have to see and act. I can’t live

by sitting catatonic.

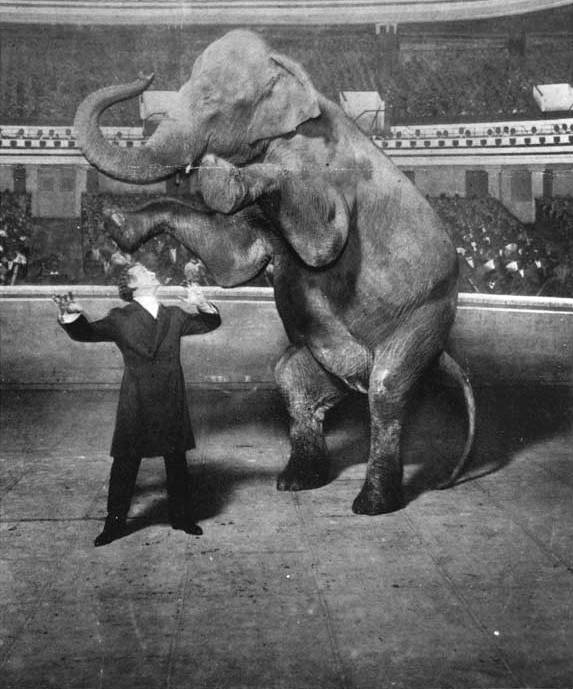

Harry Houdini

with his “disappearing” elephant, Jennie

(credit:

Wikimedia Commons)

Life is constantly making demands on me to

move and keep moving. I have to gamble on some models of reality just to live

my life; I go with my best horses, my most successful and trusted concepts. And

sometimes I change my mind.

This flexibility on my part is not

weakness or lack of discipline; it is just life. Bayesianism tells us the same thing as Kuhn’s thesis in The Structure of Scientific Revolutions: we are constantly adjusting all our concepts as we try to make our ways of dealing with reality more effective.

And when a researcher begins to grasp a

new hypothesis and the theory it is based on, the resulting experience is like

a religious “awakening” – profound, even life-altering. Everything changes when

we accept a new model or theory because we change. How we perceive and think

changes. In order to “get it”, we have to change. We have to eliminate some old

beliefs from our familiar background belief set and literally see in a new way.

And what of the shifting nature of our

view of reality and the gambling spirit that is implicit in the Bayesian model?

The general tone of all our experiences tells us this overall view of our world

and ourselves – though it may seem scary, or maybe, for confident individuals, exhilarating

– is just life.

We have now arrived at a point where we

can feel confident that Bayesianism gives us a good base on which to build

further reasoning. 100% reliable? No. But solid enough to use and so to

get on with all the other thinking that must be done. It can answer its critics – both those who

attack it with real-world counterexamples and those who attack it with pure

logic. And it outperforms both Rationalism and Empiricism every time.

Bayesianism is not logically unshakable.

But in a sensible view of our world and ourselves, Bayesianism serves well.

First, because it makes sense when it is applied to our real problem-solving

behavior; second, because it works even when it is applied to itself; third, because

we must have a foundational belief of some kind in place in order to get on

with building a universal moral code; and, fourth, because – as was shown

earlier – we have to build that new code. That task is crucial. Without a new

moral code, we aren’t going to survive.

We are now at a good place to pause to

summarize our case so far. The next chapter is devoted to that summing up.

Notes

1. Thomas Kuhn, The Structure of Scientific Revolutions (Chicago: The University of Chicago Press, 3rd ed., 1996).
